The evolution of Compound AI systems

by Jason Yip

In February 2024, Matei Zaharia, CTO of Databricks, et al. released a paper suggesting that focusing on a single Large Language Model (LLM) is no longer enough. Generative AI evolves every month, if not every week. In this article, we will examine whether that piece, already aging at the light speed of the GenAI era, is still relevant, and how we can continue to stay ahead of the game.

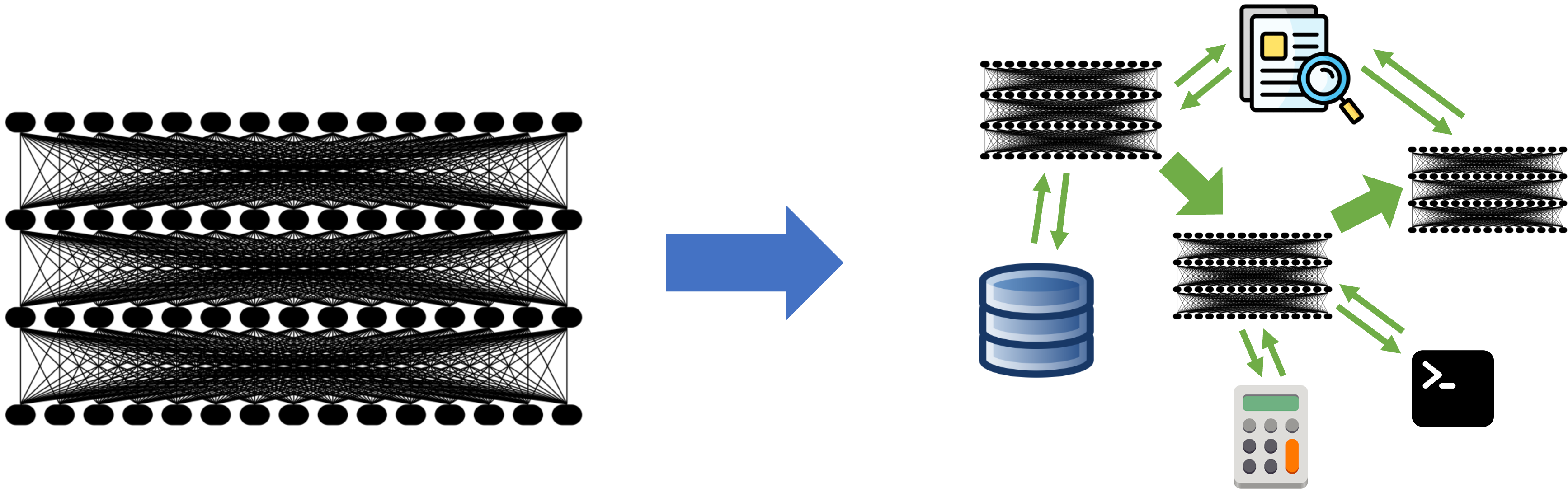

Compound AI Systems

With buzzwords increasingly flooding the LLM world, it helps to recognize that most of these ideas try to improve the LLM's accuracy through one of the following techniques:

1. By giving it more clarity or step-by-step instructions

   This is usually done with better prompt engineering techniques or with tools like LangChain and LlamaIndex.

2. By giving it some external tools (think of the MVC pattern in a web app)

   This is a classic example of Retrieval Augmented Generation (RAG). In the case of RAG, the LLM is often backed by a vector database, which serves as a retriever when the LLM does not have sufficiently recent knowledge.

3. By giving it some assistants, by means of another LLM or our favorite user-defined functions

   This is the latest trend in AI agent systems: one LLM can't do all the jobs anymore, so we can either bring in a smaller language model or empower the LLM with user-defined functions, telling it that they exist so it can decide when the time is right to use them.

The second and third techniques above are examples of Compound AI systems, because "compound" simply means there is more than one system. The LLM alone can't do its job, and it requires external help.
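To make the retriever idea concrete, here is a minimal sketch of the retrieval step in a RAG setup. It is only an illustration under stated assumptions: the `DOCUMENTS` list, the bag-of-words `embed` function and the prompt template are all hypothetical stand-ins, whereas a real system would use an embedding model and a vector database.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base (assumption: stands in for a vector database).
DOCUMENTS = [
    "Unity Catalog governs tables, models and functions.",
    "A vector database stores embeddings for similarity search.",
    "Dice games rely on random numbers between one and six.",
]


def embed(text: str) -> Counter:
    """Toy embedding: word counts, standing in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    query = embed(question)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(query, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str) -> str:
    """Combine retrieved context with the question before calling the LLM."""
    context = "\n".join(retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("What does a vector database store?"))
```

The LLM itself never appears in this sketch. The "compound" part is that a separate retriever picks the context before the model is asked anything.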

Databricks function calling

Function calling and tools are interchangeable terms in the context of LLMs. As the name suggests, we provide functions for an LLM to use. This is very similar to stored procedures or user-defined functions; the only difference is that the LLM now decides which function to use, rather than our code calling it explicitly. With this context in mind, we understand what is really happening behind the scenes, which makes online discussions much easier to follow and helps us make the right choices when developing LLM applications.
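The difference from an ordinary function call can be sketched in a few lines. This is a simplified illustration, not how any particular platform implements it: `fake_model` is a hard-coded stand-in for an LLM, and the tool names `get_weather` and `add_numbers` are hypothetical. The point is only that the application registers the functions, the model picks one by name, and the application then dispatches the call.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool: returns a canned weather report."""
    return f"It is sunny in {city}."


def add_numbers(a: float, b: float) -> float:
    """Hypothetical tool: adds two numbers."""
    return a + b


# Registry of the functions we tell the model about.
TOOLS = {"get_weather": get_weather, "add_numbers": add_numbers}


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM (assumption): returns a JSON tool call.

    A real model would choose the tool and its arguments from the tool
    descriptions and the prompt; here the choice is hard-coded.
    """
    if "weather" in prompt.lower():
        return json.dumps({"name": "get_weather", "arguments": {"city": "Seattle"}})
    return json.dumps({"name": "add_numbers", "arguments": {"a": 2, "b": 3}})


def run(prompt: str):
    """Ask the model which tool to use, then execute that tool ourselves."""
    call = json.loads(fake_model(prompt))
    func = TOOLS[call["name"]]  # the model picked the name, we do the calling
    return func(**call["arguments"])


print(run("What is the weather like?"))
print(run("Please add two and three"))
```

Note that the model only ever returns a name and arguments. The function body runs in our own code, which is why permissions on the function matter.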

With the purpose of function calling in mind, let's look at how you can use Databricks to both manage and leverage function calling.

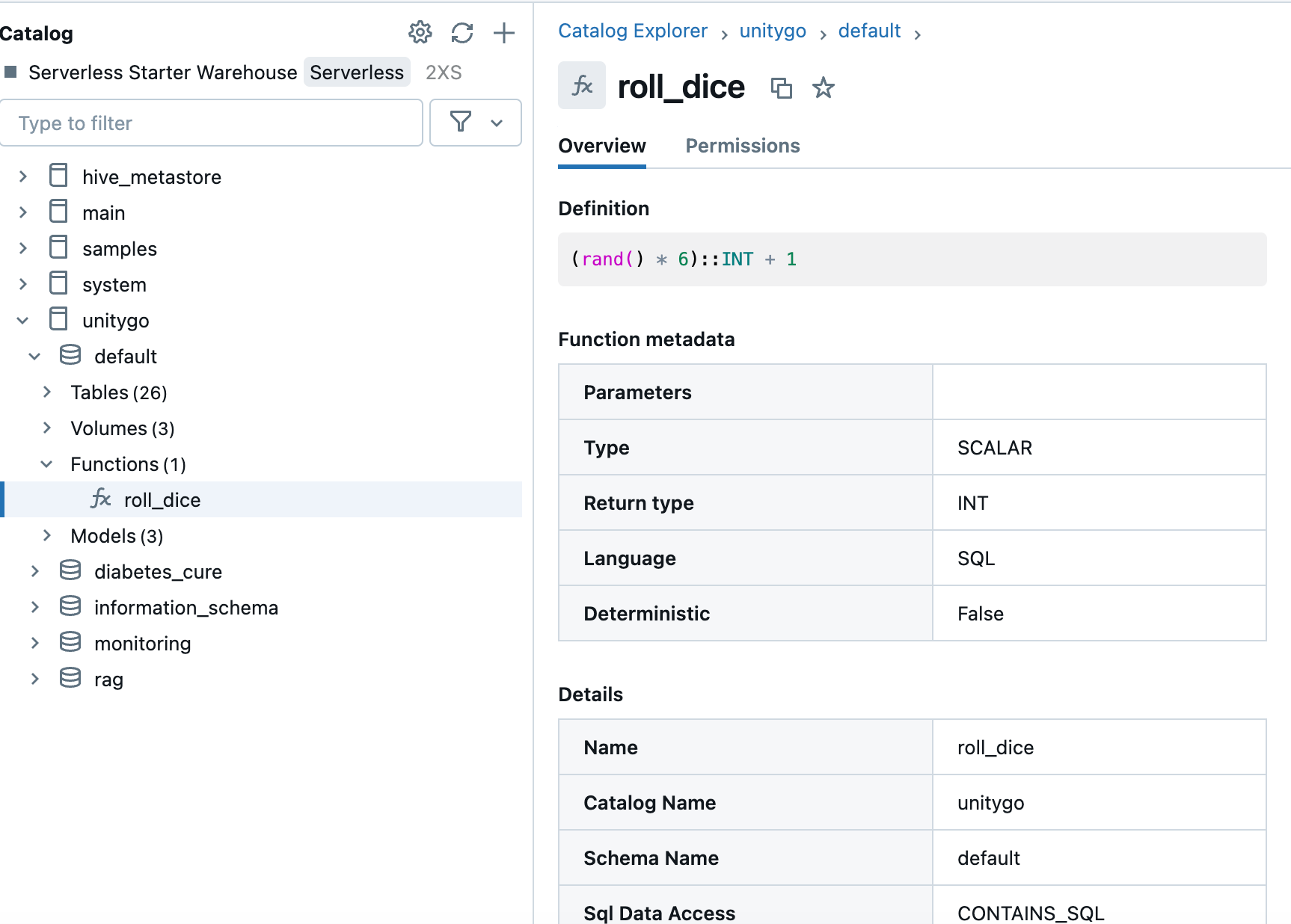

First, we will define a user-defined function (UDF). This is not a new concept, but Unity Catalog has built-in governance for UDFs, so we can apply fine-grained permissions to the function itself without exposing unnecessary sensitive logic to other users, including the LLM.

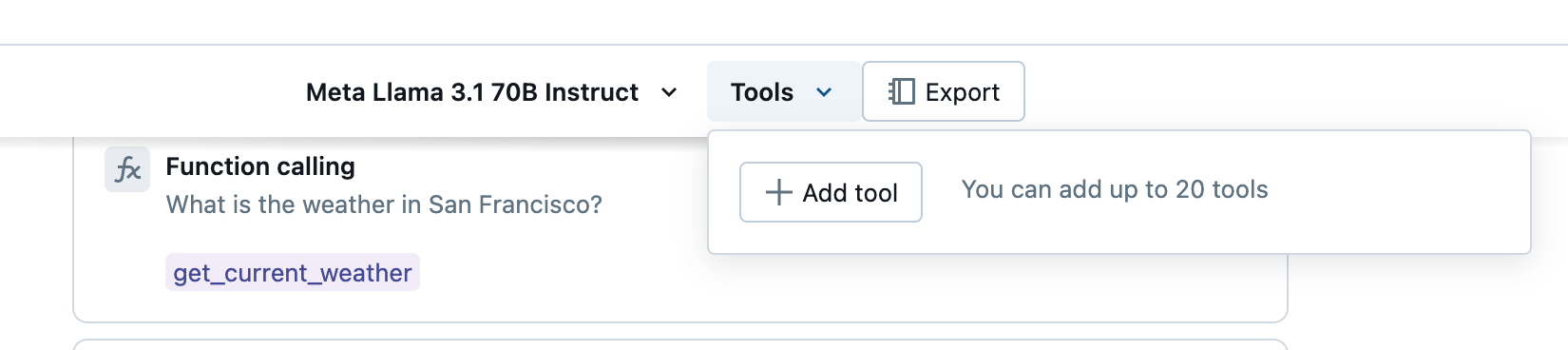

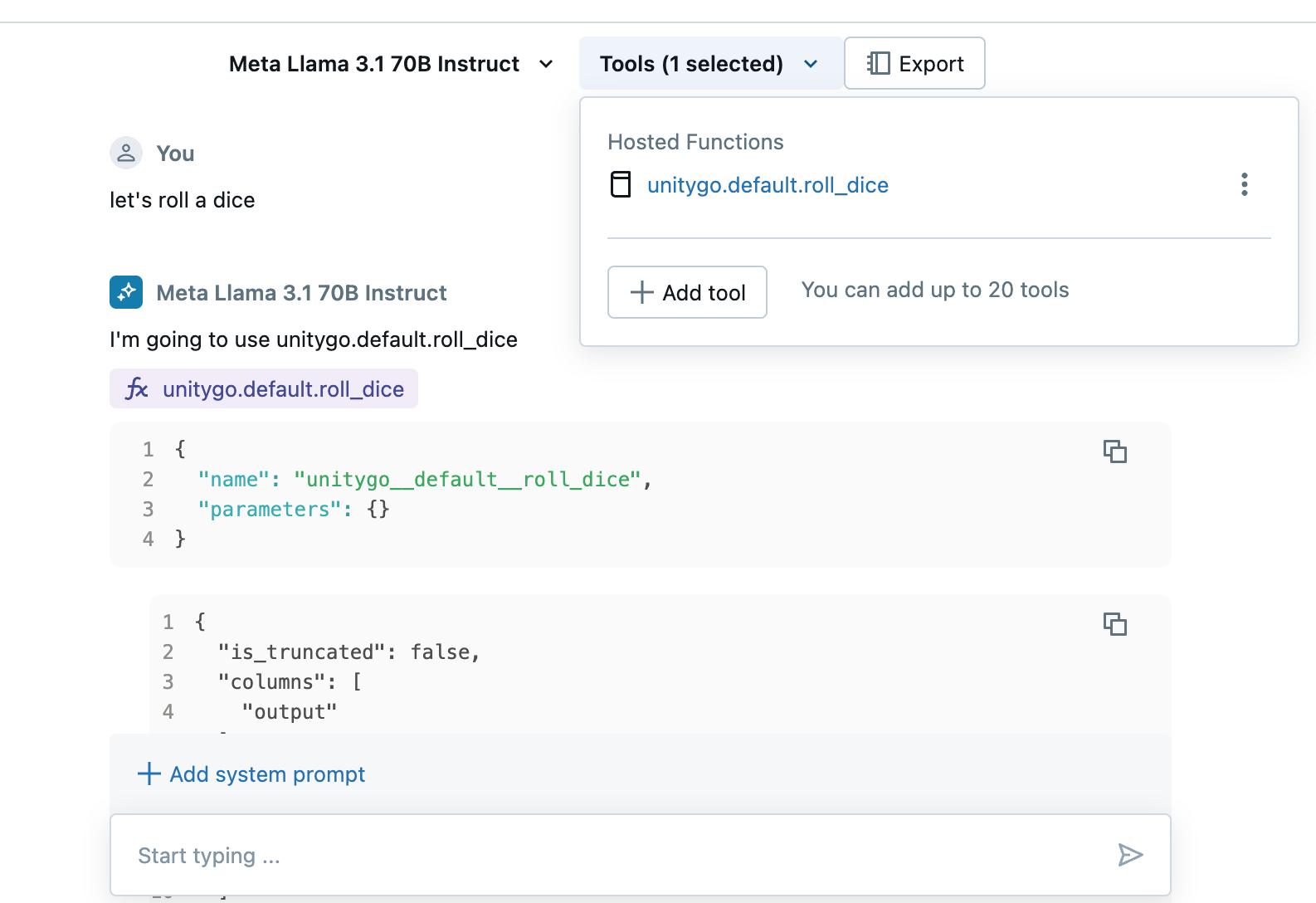

Next, we can try it out in AI Playground in Databricks. Remember that function calling and tools are interchangeable terms, so we actually need to look for it under "Tools".

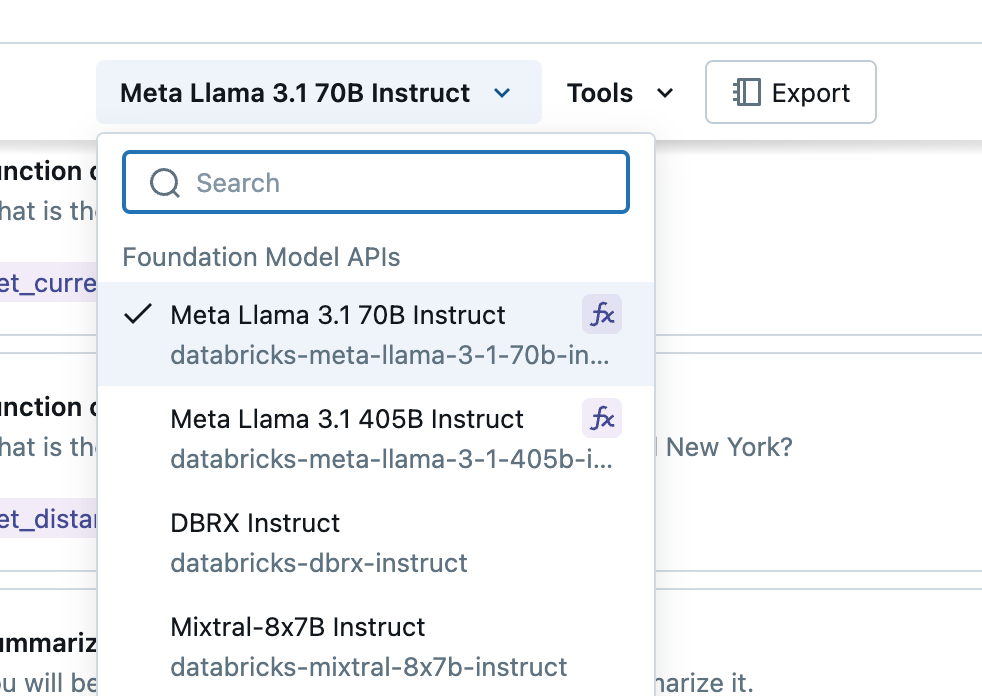

If you cannot see the Tools option, the model is not yet mature enough to analyze the functions and make a decision on your behalf. Databricks conveniently puts an "fx" next to a model to signal that it is ready for function calling.

Next, we will see whether the model can leverage our new dice-rolling function. The prompt is simply "let's roll a dice".
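For readers who cannot see the screenshots, a dice-rolling tool might look roughly like the sketch below. This is not the function from this article: the name `roll_dice`, its `sides` parameter and the description text are assumptions for illustration, and the dictionary follows the JSON-schema style commonly used to describe tools to a model, which is not necessarily what Databricks generates from a Unity Catalog function.

```python
import random


def roll_dice(sides: int = 6) -> int:
    """Roll one die with the given number of sides (hypothetical example tool)."""
    if sides < 2:
        raise ValueError("a die needs at least 2 sides")
    return random.randint(1, sides)  # inclusive on both ends


# Description handed to the model so it knows the tool exists and when to use it.
# Assumption: JSON-schema style tool definition, for illustration only.
ROLL_DICE_TOOL = {
    "type": "function",
    "function": {
        "name": "roll_dice",
        "description": "Roll a die and return the number that comes up.",
        "parameters": {
            "type": "object",
            "properties": {
                "sides": {
                    "type": "integer",
                    "description": "Number of sides on the die (default 6).",
                }
            },
            "required": [],
        },
    },
}

print(roll_dice())
```

The description matters as much as the code: it is the only thing the model reads when deciding whether a prompt such as "let's roll a dice" should trigger this tool.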

Databricks allows users to add up to 20 tools. That might sound very limited, but the limit applies per prompt: for each prompt, you can allow the LLM to leverage 20 functions. You can specify them programmatically in a configuration file, or you can call another function inside a function, so the possibilities are endless.

In the world of LLMs, there seems to be a leaderboard for everything, so function-calling ability has its own leaderboard too. In fact, if you have ever stumbled upon API documentation, you know it is not a trivial task to properly leverage these interfaces to generate an answer. Nevertheless, below is the leaderboard mentioned above:

Conclusion

Function calling might not be a fancy new idea, but coupled with other tools, it can give the LLM powerful agentic abilities. In the following posts, we will discuss these tools in more detail. They include RAG systems (there are multiple design patterns) and workflow libraries like LangChain, LlamaIndex and LangGraph, and the list goes on. For enterprises, being able to leverage internal tools (functions) without sending the data to an LLM is also a big win.